Building a data warehouse once seemed like a Herculean task involving a myriad of complex steps and deep expertise in data management. From grappling with database management to crafting intricate data models and navigating the ETL labyrinth, the journey could stretch out for months.

But we're in a new era now, where technology's rapid advancement has ushered in a suite of tools and platforms. These innovations make data warehousing accessible to a broader audience, simplifying what was once an arduous process. We're here to demystify the essentials of building a data warehouse, walking you through the prerequisites, a step-by-step guide, and best practices to ensure your success.

Building a Data Warehouse

What is a Data Warehouse?

AWe understand that at the core of strategic decision-making lies comprehensive and processed data. A data warehouse acts as a central repository where this data is stored after being extracted, transformed, and loaded - the ETL process - from various transactional databases, operational data stores, and other external sources. Our focus is on synthesizing vast amounts of data into a format that is stable, integrated, and optimized for querying and analysis.

Thanks to advancements in technology, setting up a data warehouse no longer requires an extensive time commitment or in-depth expertise in every data management discipline. We recommend considering enterprise data warehouse architecture that aligns with business needs and scales accordingly.

Benefits of a Data Warehouse

The implementation of a data warehouse system has proven to be a crucial step towards data consolidation and strategic analytics. Below, we outline a snapshot of the top-tier benefits that are transforming data management across industries:

- Consistency: By compiling data from disparate sources, it ensures uniformity across all information.

- Security: As a stable repository, a data warehouse provides a secure environment that maintains data integrity over time.

- Time Efficiency: Allows swift access to data, significantly reducing the time needed to gather information from individual sources.

- Data Governance: Streamlines adherence to regulations, offering a more straightforward approach to data compliance with standards such as GDPR and CCPA.

- Silos Elimination: By integrating data, a data warehouse breaks down barriers between different departmental systems, facilitating interdepartmental communication and collaboration.

The benefits extend further, enhancing business intelligence activities and anchoring the decision-making process in reliable, well-governed data.

Here's a straightforward table showcasing additional advantages:

Employing a data warehouse essentially equips businesses with the tools to better understand their data landscape, improve operational efficiency, and bolster strategic initiatives. As we continue to navigate through the processes and methodologies integral to building a data warehouse, it's evident that harnessing its full potential requires a thoughtful and well-planned approach.

Planning the Data Warehouse

Define the Goals and Objectives

Successfully planning a data warehouse starts with defining clear goals and objectives. It's a crucial task that aligns the data warehouse's capabilities with the strategic aims of the organization. We gather input from key stakeholders, including business leaders, IT experts, and end users, to learn what they expect from the data warehouse. It's not just about storing data; it’s about using that data to generate actionable insights that support informed decision-making. We also ensure that the goals we set are measurable, achievable, and tightly bound to our overarching business strategy.

Identify Data Sources

Once our goals are pinned down, we move to identifying various data sources that feed into the warehouse. A thorough cataloging of all available data points – from internal systems such as CRMs and ERPs to external data from partners and IoT devices – ensures that we have a comprehensive understanding of our data landscape. This step is foundational because it influences the integration process and data quality.

Identifying these sources aids us in mapping out the nature and relevance of each set of data, thereby tailoring our warehouse to suit our unique business needs.

Determine Data Warehouse Structure

The third pillar in planning a data warehouse involves determining its structure. Various data models cater to different types of data warehouses. For transaction-heavy warehouses, the Entity-Relationship (ER) model works best, while analytical warehouses benefit from using dimensional models like the Star Schema. This decision impacts everything from query complexity to data retrieval efficiency.

The chosen structure strongly dictates the performance and manageability of the data warehouse. Whether it’s enabling complex analytical queries or ensuring streamlined transactional records, the data warehouse structure is a fundamental building block of efficient data management.

Designing the Data Warehouse

Data Modeling

When we're diving into the realm of data warehousing, data modeling cannot be overstated. It represents the framework that shapes how data is stored, accessed, and managed. To deliver data models that are effective and can stand the test of time, there are a few guidelines that we adhere to:

- Align with Business Requirements: The model must reflect our initial step of identifying what the business needs. This includes pinpointing relevant entities, attributes, and their interrelationships.

- Adopt a Standard Notation: Utilizing industry accepted notations like ER diagrams or dimensional modeling increases clarity and ease of maintenance.

- Integrate Seamlessly: Our model must support the integration of data from varied sources, ensuring a unified structure.

It's imperative that the data model promotes data quality, ensuring accuracy and consistency, which is crucial for reliable analytics. Below is a table representing the core components that we consider in our data modeling phase:

This structured approach to data modeling sets the stage for robust data warehouse design that's equipped to handle future business demands.

ETL (Extract, Transform, Load) Process

The ETL process is the operational backbone of our data warehouse. This multi-phased process involves:

- Extracting data from numerous sources,

- Transforming it according to business logic or rules,

- Loading it into the data warehouse for analytics and decision making.

Deciding whether to employ batch or real-time processing will hinge on our specific data and performance requirements. The choice impacts how quickly and efficiently we can make data-driven decisions.

Our ETL process must be high-performing and scalable. Modern architectures often prioritize ELT (Extract, Load, Transform) processes, enabling powerful cloud data platforms like Snowflake and Databricks to handle immense scale and complex computations. The following table outlines a comparison of ETL and ELT processes:

We tailor our approach to suit business needs while ensuring we’re leveraging the best practices for performance and efficiency.

Data Quality and Cleansing

Achieving high-quality data within our data warehouse is pivotal. We can't expect to draw valuable insights from poor-quality, inconsistent data. Thus, we embed data quality measures right at the source and throughout the ETL process. This includes:

- Validation: Ensuring that data meets certain standards and formats before entering our warehouse.

- Deduplication: Eliminating duplicate records to prevent redundancy and confusion.

- Error Correction: Identifying and rectifying erroneous entries within the data set.

These cleansing procedures enhance the integrity of the data warehouse, making it a reliable source for critical decision-making. Our ongoing commitment to maintaining data quality involves setting in place stringent governance standards and routinely monitoring for potential discrepancies.

Implementing the Data Warehouse

As we delve into the process of building a robust data warehouse, it's essential to consider the integral components that make up its framework. Implementing a data warehouse goes beyond the design; it requires a thoughtful approach to the selection and setup of hardware and software, database configuration, and ETL processes to ensure smooth and efficient functionality.

Hardware and Software Requirements

In our experience, it's paramount to select the appropriate hardware and software that align with the scope and demands of the data warehouse. These choices impact performance, scalability, and cost. Often, we recommend adopting a cloud-first strategy to leverage the inherent benefits of cloud services. Below are the typical investments during the initial setup:

When considering software, it's not just about acquiring database licenses. It's also about selecting the right management tools that offer ease of use and flexibility. These might include data virtualization technologies or no-code data integration tools that simplify the process of connecting with various data sources.

Database Setup

Once we've established the hardware and software framework, we move on to setting up the database. This process involves establishing the development, testing, and production environments, which should reside on separate servers to mirror the best practices in software development. We ensure that the environments are optimized to handle the data loads and user queries efficiently.

Here are the key considerations for database setup:

- Environment Mirroring: To avoid server overload during environment transitions

- Selective Data Retrieval: Minimize data retrieval by targeting specific columns

- Vendor Limitations: Understanding nuances of OLAP vendors, like BigQuery and RedShift

These practices are critical to maximize workflows and query speed without incurring unnecessary costs.

ETL Development and Deployment

ETL processes are the backbone of data warehousing. We prioritize the development and deployment of these processes to ensure that data extracted from various sources is transformed accurately and loaded into the warehouse effectively. Real-time data integration has become an invaluable feature for many businesses requiring immediate data insights.

When deploying ETL processes, it's essential to:

- Validate Data Quality: Implement thorough testing to ensure data is accurate and reliable

- Enhance Scalability: Ensure that the ETL processes can handle increased data loads over time

- Monitor the Performance: Regularly track the efficiency of the ETL processes

The ETL phase is not just about moving data; it's about transforming it into a format that's ready for analysis, aligned with business logic, and assuring that it's loaded with high accuracy. Our commitment extends beyond just setting up the ETL processes—we're also focused on continuous improvement and scalability to meet future data demands.

Testing and Deployment

When building a data warehouse, testing, and deployment are critical steps that determine the robustness and efficacy of the platform. These steps reveal how well the system aligns with our business intelligence needs. We need to ensure our data warehouse stands up not only to our current demands but is prepared to adapt as our needs evolve.

Unit Testing

Unit testing is the first line of defense in the testing phase, ensuring each component of our data warehouse functions as intended. During unit testing, we focus on individual modules that make up the system:

- Data extraction routines

- Transformation logic

- Loading mechanisms

- Database schemas

- Stored procedures

By isolating and examining these discrete parts, we can promptly identify and resolve issues. It’s about guaranteeing that every piece of the puzzle fits perfectly before we start assembling the bigger picture.

Integration Testing

Once we've verified the individual components through unit testing, we move on to integration testing. Integration testing is crucial in understanding how these components communicate and operate together within the system. We're looking for any inconsistencies or errors that could arise when the units start interacting—often, these aren't visible at the unit level.

Key components for integration testing include:

- Data flows between modules

- ETL processes as a cohesive unit

- Interaction between the database and BI tool

At this stage, we use test data that simulates real-world scenarios to evaluate the combined operations, ensuring seamless functionality and data integrity throughout.

User Acceptance Testing

The penultimate step before deployment is User Acceptance Testing (UAT), where we validate the end-to-end system from the perspective of end-users. This phase is about confirming that the data warehouse meets the predefined business requirements and can handle real-world tasks effectively.

During UAT, we collaborate with stakeholders who perform tasks that they would in their day-to-day operations. Feedback from UAT is invaluable, as it directly impacts:

- User interface and experience

- Reports generation and dashboard functionality

- Overall system performance from the user standpoint

Our goal here is to ensure the data warehouse not only works technically but is also intuitive and efficient for those who will use it daily. User satisfaction at this juncture is a clear indicator of the success of our implementation efforts.

Maintaining the Data Warehouse

Data Governance

In building a robust data warehouse, we must establish a comprehensive data governance framework. Data governance ensures the overall management of data availability, usability, integrity, and security in an enterprise. Our approach involves several key practices:

- Implementing data cataloging tools: cataloging assists in maintaining an inventory of available data, making it easier for users to find and understand data assets.

- Defining data stewardship roles: stewards are responsible for the management of data elements in terms of quality, lifecycle, and policies.

- Enforcing data standards and policies: adherence to protocols maintains consistency and streamlines the data management process across the organization.

- Continuous data quality management: proactive measures are taken to correct data issues and prevent them from reoccurring.

Incorporating these practices, we ensure our data governance strategy aligns with our business objectives and regulatory requirements. It's vital to remember that data governance is not a one-time effort, but a continued commitment to maintaining data standards and procedures.

Performance Monitoring and Tuning

Monitoring and tuning the data warehouse is key to maintaining its efficiency and reliability. We regularly track system performance to promptly identify any issues that could impede functionality or hamper user experience. To illustrate our approach, here’s a summary of our monitoring activities and potential responses:

We'll often tune the data warehouse by refining indexing strategies and revising SQL queries to prevent bottlenecks before they impact performance. Real-time monitoring tools are essential to this proactive approach, allowing us to address minor issues swiftly, and mitigating the risk of them evolving into larger complications.

Data Security and Access Control

The security of our data warehouse is paramount; we prioritize protecting sensitive information and ensuring that access is controlled and monitored. Our security measures include:

- Implementing robust authentication and authorization mechanisms: only verified personnel can access sensitive data assets.

- Regular security audits: we inspect and update security protocols to keep pace with evolving threats.

- Encrypting data in transit and at rest: encryption acts as a fundamental barrier against unauthorized data breaches.

- Maintaining detailed access logs: keeping records of who accessed what data and when ensures accountability and compliance.

To balance access needs and security, we also institute role-based access control (RBAC). This approach grants permissions according to the roles of individual users within the organization. Here's a brief breakdown of RBAC levels and their access privileges:

By continuously applying rigorous data security measures and access controls, we provide a secure environment that aligns with industry best practices and legislative compliance such as GDPR and CCPA.

Conclusion

We've explored the intricate process of building and maintaining a robust data warehouse. It's clear that ensuring data integrity, optimizing performance, and securing sensitive information are pivotal to our success. As we move forward, let's not forget the importance of continuous improvement in our data strategies. By staying vigilant in governance, monitoring, and security, we'll keep our data warehouse a powerful asset for informed decision-making

Looking to do more with your data?

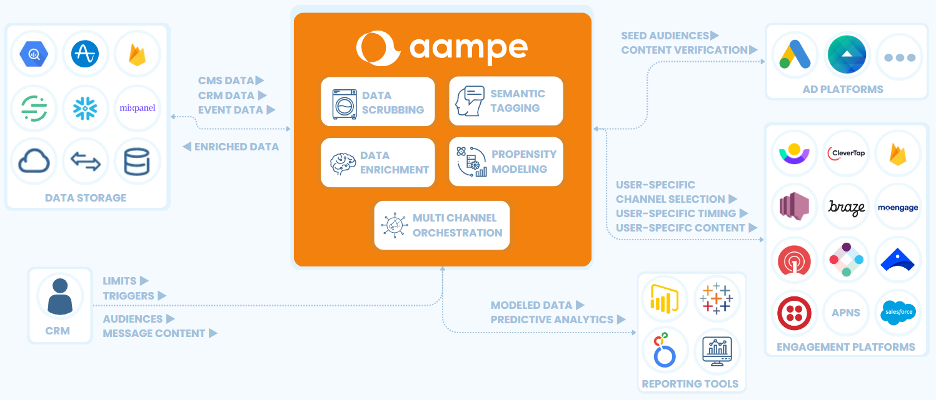

Aampe helps teams use their data more effectively, turning vast volumes of unstructured data into effective multi-channel user engagement strategies. Click the big orange button below to learn more!

.png)