The essence of data pipeline automation lies in its ability to streamline the flow from data extraction to the delivery of actionable insights. With automation, you can ensure that data quality is maintained as data travels through each stage of your pipeline.

Moreover, it allows for continuous data ingestion and processing, which means that your data reflects the most current state of affairs. This real-time processing capability is particularly crucial in environments where staying up-to-date with the latest information can make or break your business outcomes.

As the volume and velocity of data continue to grow, embracing data pipeline automation is not just an improvement—it's an essential evolution. To achieve this, it's crucial to explore tools that can orchestrate these pipelines, such as Apache Airflow, ensuring that your workflows become more streamlined, adaptable, and future-proof.

Understanding Data Pipelines

Components of a Data Pipeline

Data Source: The inception point where your data originates, which may include databases, SaaS platforms, or even real-time streaming sources.

Ingestion: The process where data is collected from the data sources. This can be done in batches or a continuous streaming fashion.

Transformation: At this stage, raw data is converted into a more usable format, often involving cleaning, aggregating, and preparing the data. This step is the "T" in ETL (Extract, Transform, Load).

Loading: This is where transformed data is moved to its final destination, like a database or data warehouse.

End-point Data Storage: Your pipeline's destination, where data is stored for analysis. It could be a data warehouse or other storage system.

Data Processing Tools: These tools handle processing and may include batch or stream processing systems.

Workflow Schedule: Determines how and when the pipeline will execute, which can be triggered by time or data events.

Monitoring and Alerting: Tracks the pipeline's health, sending alerts if something goes wrong, thus ensuring data quality and pipeline reliability.

Maintenance: Covers updating, testing, and refining the pipeline so that it adapts to data changes or new requirements.

Data Pipeline vs ETL

The terms data pipeline and ETL are often used interchangeably, but there are differences.

ETL is a specific sequence: extract data from sources, transform it, and load it into a warehouse. A data pipeline is the broader framework for data movement. It can include ETL as one part, and it can also cover other patterns such as ELT (Extract, Load, Transform), where transformation occurs after the data is loaded into the destination.
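The difference in ordering can be sketched with Python's built-in `sqlite3` module standing in for the warehouse. The table names, the sample orders, and the cents-to-dollars transformation are assumptions made for illustration, not part of any particular product.

```python
import sqlite3

# Hypothetical raw records: amounts arrive as strings, in cents.
RAW_ORDERS = [("A-1", "1250"), ("A-2", "399"), ("A-3", "10000")]


def run_etl(conn):
    """ETL: transform in application code, then load the finished rows."""
    transformed = [(order_id, int(cents) / 100) for order_id, cents in RAW_ORDERS]
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, amount_usd REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", transformed)


def run_elt(conn):
    """ELT: load the raw rows first, then transform inside the destination."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount_cents TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", RAW_ORDERS)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT order_id, CAST(amount_cents AS INTEGER) / 100.0 AS amount_usd "
        "FROM raw_orders"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_rows = conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
```

Both paths end with the same rows. What changes is where the transformation runs and whether the untransformed data is kept in the destination.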

Automation in Data Pipeline

Data pipeline automation is revolutionizing how enterprises handle data by significantly enhancing efficiency and scalability. By automating workflows, businesses can expedite data processing and decision-making while minimizing errors and reducing costs.

Benefits of Automation

Increased Productivity: Automation enables teams to focus on strategic tasks by reducing the hands-on time required to manage data workflows. Automated data pipelines facilitate the efficient transformation and movement of data, leading to faster turnaround times for data-related projects.

Scalability and Speed: As your data-driven enterprise grows, so does the need for scalable solutions that can handle increased workloads without compromising performance. Automation lets you scale your data pipelines seamlessly, easily adapting to higher volumes of data and maintaining processing speed.

Cost Reduction: By cutting down on manual intervention, automation helps reduce operational costs. Automated systems are less prone to errors, decreasing potential expenses associated with data discrepancies and manual troubleshooting.

Examples of Automated Data Pipelines

Functionality: Your data pipeline's design often includes extracting data from various sources, transforming it for analysis, and loading it into a destination store. When you automate this process, it responds to triggers systematically without human input, enhancing the speed and reliability of data-handling tasks.

Implementation Example: Imagine setting up an automated data pipeline that regularly ingests sales data from your online platform. Here's a simplified code sample in Python for a hypothetical data ingestion task using an automated job scheduler, often a feature of modern data pipeline platforms:
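The sketch below shows one way such a job could look, using only the Python standard library. The function names (`fetch_sales`, `ingest_sales`, `run_on_schedule`), the sample rows, and the in-memory list standing in for the data store are all assumptions made for illustration. In practice a pipeline platform or cron would usually replace the hand-rolled scheduling loop.

```python
import time
from datetime import datetime, timezone


def fetch_sales():
    """Stand-in for a call to the online platform; returns hypothetical rows."""
    return [{"order_id": 1, "total": 19.99}, {"order_id": 2, "total": 5.00}]


def ingest_sales(repository):
    """Pull the latest sales rows and append them, stamped with the load time."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    rows = [{**row, "loaded_at": loaded_at} for row in fetch_sales()]
    repository.extend(rows)
    return len(rows)


def run_on_schedule(job, interval_seconds, runs, sleep=time.sleep):
    """Call job() `runs` times, pausing `interval_seconds` between calls."""
    results = []
    for i in range(runs):
        results.append(job())
        if i < runs - 1:
            sleep(interval_seconds)
    return results


repository = []  # an in-memory list standing in for the data store
# Two runs with no pause, for demonstration; a real job might run hourly.
counts = run_on_schedule(lambda: ingest_sales(repository), interval_seconds=0, runs=2)
```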

Leveraging data pipeline automation, systems can run such tasks on a schedule, ensuring your data repository is consistently up-to-date without constant monitoring.

Practical Considerations: When planning for automated data pipelines, it's essential to think about the architecture that will best suit your needs. You may require a particular approach, such as stream processing for real-time data or batch processing for accumulated data. Establishing a clear strategy for your automation can lead to a more robust and responsive data ecosystem.

Key Technologies and Tools

Machine Learning and AI Implications

Machine learning (ML) algorithms can extend data pipeline automation by enabling predictive analytics and pattern detection on the data flowing through it. Apache Spark, for example, ships with MLlib, a machine learning library built for scalable data processing and complex computations.

Data Pipeline Tools and Software

Selecting the right pipeline tools is critical for managing your data workflow. Apache Airflow is a widely used open-source platform that orchestrates complex computational workflows and data processing. Snowflake, another tool, simplifies the data pipeline's ETL processes through its cloud data platform, enabling more efficient data management and analysis in a multi-cloud environment.

Cloud Computing Platforms

Cloud platforms play a pivotal role in data pipeline automation. Azure Data Factory is a cloud data integration service from Microsoft Azure that lets you connect disparate data sources. It can process and transform the data using various compute services, which makes it a strong option for big data and analytics projects.

Designing a Data Pipeline

Designing an effective data pipeline is crucial for automating data flow from its source to its destination. This process involves implementing robust data processing strategies, ensuring efficient data orchestration, and maintaining a reliable schema management system.

Data Processing Strategies

The first consideration in conceiving your data pipeline is the data processing strategy. You will identify various data sources and determine the processing needs to transform raw data into a clean, usable format. Methods might include batch processing for large volumes of historical data or real-time processing for immediate insights. Efficient strategies ensure data reaches the destinations such as data warehouses or analytics platforms.
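To make the contrast between the two strategies concrete, here is a minimal sketch. The event records and the function names `process_batch` and `process_stream` are hypothetical; real systems would read from files, queues, or a stream processor rather than a Python list.

```python
def process_batch(records):
    """Batch: the whole dataset is available, so compute one result at the end."""
    return sum(r["amount"] for r in records)


def process_stream(records):
    """Streaming: yield an updated running total as each record arrives."""
    total = 0
    for r in records:
        total += r["amount"]
        yield total


events = [{"amount": 10}, {"amount": 25}, {"amount": 5}]
batch_total = process_batch(events)                    # one answer, after the fact
running_totals = list(process_stream(iter(events)))    # an answer per event
```

The batch function produces a single figure once all the data is in, while the streaming generator has a usable figure after every event, which is the trade-off between throughput on large volumes and immediacy of insight.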

Data Orchestration

Data orchestration underpins the automated coordination of different parts of the data pipeline. It involves scheduling tasks, managing dependencies, and ensuring that each step, from extraction to loading into storage, operates smoothly. Platforms like Apache Airflow provide a programmable environment to design robust data workflows, coordinating the data lifecycle from ingestion to insights.
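Dependency management is the core of what an orchestrator does, and it can be sketched with the standard library's `graphlib` module (Python 3.9 or later). This is not Apache Airflow's API; the task names and the `run_pipeline` helper are assumptions chosen for illustration.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
}


def run_pipeline(tasks, dependencies):
    """Run each task after all of its upstream tasks; return the order used."""
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        order.append(name)
    return order


log = []
# Placeholder task bodies that only record that they ran.
TASKS = {name: (lambda n=name: log.append(f"ran {n}")) for name in DEPENDENCIES}
execution_order = run_pipeline(TASKS, DEPENDENCIES)
```

The two extract tasks have no dependency on each other, so an orchestrator is free to run them in either order or in parallel; `transform` and `load` always come afterwards.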

Schema Management

Lastly, schema management is essential for maintaining the structure and organization of your data. It includes defining a metadata model and managing changes to the schema. Proper schema management is vital for data quality and compatibility between systems. Effective tools for managing schema evolution significantly reduce the maintenance burden and prevent data pipeline failures.
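A small sketch of what managing a schema change can look like in code. The two schema versions, the field names, and the `conform` helper are hypothetical; dedicated schema registries and serialization formats offer far richer compatibility rules than this.

```python
# Hypothetical schema versions: field name -> (type, default used when absent).
SCHEMA_V1 = {"user_id": (int, None), "email": (str, None)}
SCHEMA_V2 = {**SCHEMA_V1, "country": (str, "unknown")}  # v2 adds an optional field


def conform(record, schema):
    """Return a record matching `schema`, or raise ValueError on a mismatch.

    Fields not named in the schema are dropped.
    """
    result = {}
    for field, (expected_type, default) in schema.items():
        if field in record:
            value = record[field]
        elif default is not None:
            value = default  # backward-compatible: fill in the new field
        else:
            raise ValueError(f"missing required field: {field}")
        if not isinstance(value, expected_type):
            raise ValueError(f"{field} should be {expected_type.__name__}")
        result[field] = value
    return result


old_record = {"user_id": 7, "email": "a@example.com"}  # written under v1
upgraded = conform(old_record, SCHEMA_V2)
```

Because the new field carries a default, records written under the old schema still load under the new one, which is the kind of change that avoids a pipeline failure.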

Data Sources and Acquisition

Types of Data Sources

Data sources can be classified broadly into structured and unstructured systems. Structured data sources, such as SQL databases, are highly organized and easily queried. Unstructured sources include log files or images, where data is not pre-formatted for straightforward analysis. The lineage of your data, or its origin, plays a vital role in how it can be processed effectively.

- APIs: Serve as gateways to structured, often real-time data, enabling constant data flow.

- Files: Often come in the form of CSV or Excel files and provide batch data, which can be large but less dynamic.

Data Collection Methods

Collecting data efficiently is critical for building a reliable data pipeline. This acquisition can occur in two primary fashions:

- Streaming Data: Ingested in real-time, streaming data is continuous and provides up-to-the-moment information, useful for time-sensitive decisions.

- Batch Data Pipeline: Involves collecting data in chunks at scheduled intervals, which can be more manageable but less immediate.

You'll often leverage tools that automatically orchestrate the flow from these data sources. Similarly, the construction and maintenance of automated data pipelines should account for both pull mechanisms, such as polling an API, and push mechanisms, such as webhooks, which interact differently with APIs and streaming data.

Example Code:
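A minimal sketch using only the standard library. The endpoint URL is a placeholder, and passing the token as a bearer credential in the `Authorization` header is an assumption; check how your data source expects to be authenticated.

```python
import json
import urllib.request

API_URL = "https://api.example.com/v1/sales"  # hypothetical endpoint
ACCESS_TOKEN = "YOUR_ACCESS_TOKEN"


def build_request(url, token):
    """Create a GET request that passes the token as a bearer credential."""
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


def fetch_batch(url=API_URL, token=ACCESS_TOKEN, timeout=10):
    """Send the request and decode the JSON body returned by the service."""
    with urllib.request.urlopen(build_request(url, token), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# fetch_batch() performs the network call; it is not invoked here because
# the endpoint above is a placeholder.
request = build_request(API_URL, ACCESS_TOKEN)
```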

This simple code could be the first step in data acquisition, where you collect batch data through an API call. Remember to replace "YOUR_ACCESS_TOKEN" with your actual access token provided by the data source service.

Challenges in Data Pipeline Automation

You may encounter several significant hurdles as you seek to automate your data pipeline.

Managing Data Quality

Data quality and accuracy are non-negotiable requirements in data pipeline automation. Your data must be clean and reliable; otherwise, the insights derived can be misleading. Cleaning and validating data regularly helps to prevent the accumulation of technical debt, which can be costly and time-consuming to address later.
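As an illustration of routine validation, the sketch below separates clean rows from rejected ones and records why each row was rejected. The rules (a present and unique `order_id`, a non-negative numeric `total`) and the field names are assumptions; your own checks will depend on your data.

```python
def validate(rows):
    """Split rows into (clean, rejected), attaching a reason to each rejection."""
    clean, rejected, seen_ids = [], [], set()
    for row in rows:
        if row.get("order_id") is None:
            rejected.append((row, "missing order_id"))
        elif row["order_id"] in seen_ids:
            rejected.append((row, "duplicate order_id"))
        elif not isinstance(row.get("total"), (int, float)) or row["total"] < 0:
            rejected.append((row, "invalid total"))
        else:
            seen_ids.add(row["order_id"])
            clean.append(row)
    return clean, rejected


rows = [
    {"order_id": 1, "total": 20.0},
    {"order_id": 1, "total": 20.0},   # duplicate
    {"order_id": 2, "total": -5},     # negative amount
    {"order_id": None, "total": 3},   # missing key field
    {"order_id": 3, "total": 7.5},
]
clean, rejected = validate(rows)
```

Keeping the rejected rows and their reasons, rather than silently dropping them, makes it possible to trace quality problems back to their source.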

Overcoming Integration Complexities

The process of integrating disparate systems, applications, and data formats can be rife with complexity. When datasets from different sources must be transformed and combined, matching schemas and data types becomes a considerable challenge. To mitigate this, use strategies that simplify the process, like employing automated workflows that do not require additional manual coding or intervention.
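One way to reduce per-source coding is to describe each source declaratively and let a single function do the renaming and type coercion. In this sketch the two sources ("CRM" and "billing"), their field names, and their date formats are invented for illustration.

```python
from datetime import date

# Hypothetical per-source mappings: unified field -> (source field, converter).
CRM_MAPPING = {
    "customer_id": ("id", int),
    "signup_date": ("created", date.fromisoformat),
}
BILLING_MAPPING = {
    "customer_id": ("cust_no", int),
    "signup_date": ("start", lambda s: date.fromisoformat(s.replace("/", "-"))),
}


def to_unified(record, mapping):
    """Rename fields and coerce types so records from any source look alike."""
    return {
        target: convert(record[source])
        for target, (source, convert) in mapping.items()
    }


crm_row = to_unified({"id": "42", "created": "2024-01-15"}, CRM_MAPPING)
billing_row = to_unified({"cust_no": 42, "start": "2024/01/15"}, BILLING_MAPPING)
```

Adding a third source then means adding a mapping, not writing new transformation code.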

Addressing Scalability Concerns

Your data pipeline must have the ability to handle an increasing amount of work to accommodate growth. Scalability is a crucial factor, but performance issues may emerge as the volume of data increases. Downtime for maintenance or upgrades can affect the continuity of business operations.

Hence, building scalable pipelines from the start is a protective measure against future capacity issues.

The Data Pipeline Lifecycle

Development and Testing

During the development phase, your primary goal is to design the architecture of the data pipeline. This involves defining the workflow and selecting the appropriate databases and tools that will be a part of the pipeline. Ensuring that the pipeline is scalable and can handle the expected data load is crucial.

Testing is an integral part of this phase. Your data pipeline needs rigorous testing to validate the accuracy of data transformations and the performance under various conditions. Automated testing frameworks are often employed to expedite this process.
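For instance, a transformation can be covered by a small automated test. The sketch below uses Python's built-in `unittest` framework; the `normalize_email` transformation is a hypothetical example, not one taken from a specific pipeline.

```python
import unittest


def normalize_email(raw):
    """Transformation under test: trim whitespace and lowercase the address."""
    return raw.strip().lower()


class NormalizeEmailTest(unittest.TestCase):
    def test_strips_and_lowercases(self):
        self.assertEqual(normalize_email("  Ada@Example.COM "), "ada@example.com")

    def test_is_idempotent(self):
        # Re-running the transformation on its own output should change nothing.
        once = normalize_email(" Bob@Example.com")
        self.assertEqual(normalize_email(once), once)


# Run the suite programmatically so the outcome can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeEmailTest)
result = unittest.TestResult()
suite.run(result)
```

In day-to-day use you would more likely run the tests from the command line with `python -m unittest` and let a CI job do so on every change.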

Deployment and Production

Moving from a staging environment to deployment and production is a significant step. It involves carefully releasing the data pipeline into an active environment where it processes live data. This requires a reliable deployment strategy to minimize downtime and avoid data loss.

Once deployed, the production pipeline demands constant monitoring to ensure it runs smoothly and efficiently. This phase also includes performance tuning and anomaly detection to address any issues proactively. It's essential to have a robust monitoring system, analyzing logs and metrics to maintain optimal pipeline operations.
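A simple form of anomaly detection on pipeline metrics is to compare the latest value with recent history. The sketch below flags a run whose row count is more than three standard deviations from the mean; the threshold, the sample counts, and the `is_anomalous` helper are assumptions, and production monitoring tools use more sophisticated methods.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations
    away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts emitted by earlier pipeline runs.
row_counts = [1000, 1020, 980, 1010, 990]
normal_run = is_anomalous(row_counts, 1005)   # within the usual range
failed_run = is_anomalous(row_counts, 120)    # far fewer rows than usual
```

A check like this would typically run after each pipeline execution and trigger an alert when it returns `True`.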

Best Practices in Data Pipeline Automation

When automating data pipelines, consistency, efficiency, and adaptability are critical. This section will guide you through the core practices that ensure flexibility, scalability, and streamlined data flows.

Ensuring Flexibility and Scalability

To maintain a flexible and scalable data pipeline, you need an architecture that can adapt to changing data volumes and variety. This means choosing tools and platforms that support scalable data structures and can accommodate different types of streaming data pipelines.

- Modular Design: Create loosely coupled components that can be independently scaled.

- Cloud-based Services: Use cloud services for on-demand resource management.

For a data pipeline that adjusts to your growth without a hiccup, consider incorporating services that facilitate change data capture pipelines, as they can handle modifications in your data without full refreshes, enhancing both flexibility and scalability.
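The idea of picking up only what changed can be sketched with a timestamp watermark. This is one simple approach and an assumption on my part: log-based change data capture tools read the database's transaction log instead, and unlike this sketch they can also capture deletes. The row layout and function names are hypothetical.

```python
def extract_changes(source_rows, last_watermark):
    """Return rows modified after `last_watermark`, plus the new watermark."""
    changed = [r for r in source_rows if r["updated_at"] > last_watermark]
    new_watermark = max((r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark


def apply_changes(target, changes):
    """Upsert changed rows into the target, keyed by primary key."""
    for row in changes:
        target[row["id"]] = row


# ISO 8601 timestamps in one format compare correctly as plain strings.
source = [
    {"id": 1, "name": "Ada", "updated_at": "2024-01-01T09:00:00"},
    {"id": 2, "name": "Bob", "updated_at": "2024-01-02T09:00:00"},
]
target, watermark = {}, ""
changes, watermark = extract_changes(source, watermark)  # first sync: both rows
apply_changes(target, changes)

source[1] = {"id": 2, "name": "Robert", "updated_at": "2024-01-03T09:00:00"}
changes, watermark = extract_changes(source, watermark)  # second sync: one row
apply_changes(target, changes)
```

The second sync moves a single row instead of re-copying the whole table, which is where the flexibility and scalability gain comes from.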

Streamlining Data Flows

To streamline data flows, define a process that moves data through the pipeline efficiently and consistently.

- Data Orchestration: Automate the workflow of data processes to ensure data moves smoothly and efficiently through the pipeline.

- Continuous Integration/Continuous Deployment (CI/CD): Implement CI/CD to automate the deployment of data pipeline changes, fostering consistency and efficiency.

Through these methods, your data pipeline can become more efficient, allowing real-time data availability and quicker insights.

By adhering to these best practices, your data pipelines will be robust, efficient, and capable of growing with your needs, ensuring that they are a valuable asset to your data-driven initiatives.

Future Trends and Considerations

As the proliferation of data continues to scale, your understanding of the evolving dynamics in data pipeline automation will be critical. This section delves into pertinent shifts and emerging norms that are likely to impact the efficiency and approach of your data pipeline strategies.

Impact of No-Code Solutions

No-code platforms are revolutionizing the data pipeline domain, offering a significant shift towards empowering citizen integrators. Such solutions lower the technical barriers for non-specialists, affording you the agility to adapt to changes without extensive training or experience. The rise in citizen integrators indicates a trend where data pipelines become more accessible and democratized across various business units within your organization.

Looking to do more with your data?

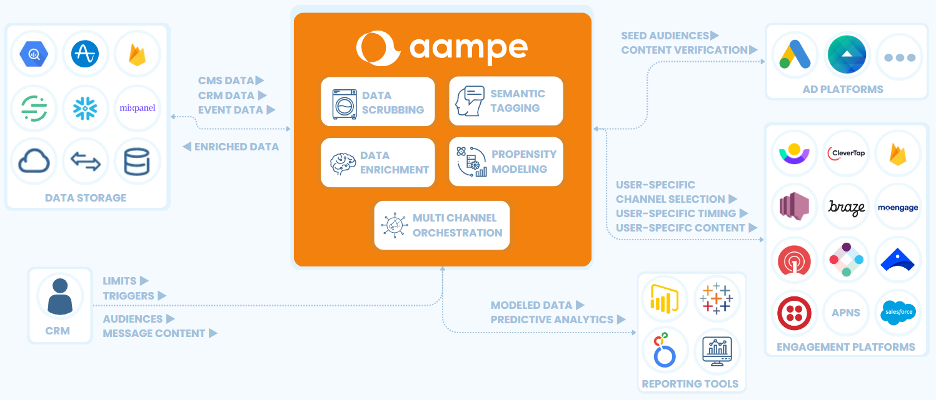

Aampe helps teams use their data more effectively, turning vast volumes of unstructured data into effective multi-channel user engagement strategies. Click the big orange button below to learn more!
