BigQuery, Google Cloud's serverless enterprise data warehouse, allows for the seamless management and analysis of large datasets. When working with BigQuery, creating tables is a fundamental task that enables you to organize and store your data effectively. A BigQuery project is the top-level container for your data, and within each project, datasets provide a structured hierarchy to organize tables. Knowing how to create tables within these datasets is crucial to ensure robust data management.

You can create a table in BigQuery using various methods, such as the Google Cloud Console, command-line tool bq, or programmatically via an API. Each table you create resides within a specific dataset, and it's possible to define the schema manually or have BigQuery auto-detect it upon loading the data. Should you need to derive tables from the results of queries, this can also be achieved using the CREATE TABLE AS SELECT statement, which demonstrates the flexibility offered by BigQuery's data definition capabilities.

Understanding the structure of tables in BigQuery is integral as well. Each table consists of schema-defined columns and rows, where rows represent individual records and columns represent the attributes of the data.

BigQuery Fundamentals

BigQuery Architecture Overview

BigQuery's architecture is serverless, meaning you don't manage any infrastructure. As a fully managed database service, it enables scalable analysis over petabytes of data. It functions as a data warehouse for analytics and operates on an append-only storage model. When setting up BigQuery, you first create a project, identified by a projectID, which serves as a top-level container for your data.

Data Types and Schemas in BigQuery

Schemas define the structure of the data in BigQuery, dictating the fields and data types that your tables will contain. For instance, common data types in BigQuery include STRING, BOOL, INT64, and DATE. The official documentation on BigQuery data types provides comprehensive insights into all available options.

Working with Projects and Datasets

Your work in BigQuery is organized into projects and datasets, where a dataset serves as a collection of tables and views within a project, identified by datasetID. You can think of a dataset as a schema in a traditional relational database, grouping logically related tables. Each dataset is tied to a project to maintain organization and billing boundaries.

To create a new dataset in BigQuery, execute the following SQL command:

Replace your_project_id with your actual project identifier, and dataset_name with your desired dataset name. Remember that the datasets should be created within an existing project, and the structure of your datasets will determine how you organize and manage your data tables in BigQuery.

Preparing Data for BigQuery

Before you begin creating tables within BigQuery, it's crucial to ensure your data is well-organized and in a supported format for a smooth and efficient import process.

Data Formats Supported by BigQuery

BigQuery is versatile in terms of the data formats it supports for loading data. Commonly used formats include:

- CSV: Comma-separated values, an accessible data interchange format.

- JSON: JavaScript Object Notation, useful for nested data structures.

- Google Sheets: Direct import facilitated for spreadsheets.

Here's a practical guide for preparing your data:

When dealing with CSV files, ensure your data complies with RFC 4180 standards, while JSON files should be newline-delimited. The GDELT Book Dataset, representing an extensive collection of human society measurements, is an example of BigQuery's capability to handle large, complex datasets in CSV format.

Sourcing Data from External Platforms

BigQuery allows you to source data from a variety of external platforms. For example:

- From the internet archive, a real-time feed of books being processed, you can dynamically load datasets into BigQuery.

- Google Sheets provides an integration enabling direct querying of tabular data within your spreadsheets.

Note that when loading data, it is essential to consider data types inherent to your dataset, such as STRING, INTEGER, or FLOAT, and ensure consistency with the schema you define in BigQuery. Sourcing data from external platforms often requires an initial export into a BigQuery-friendly format like CSV or JSON; plan this step carefully to avoid data type mismatches or losses in precision.

Creating Tables in BigQuery

When working with Google BigQuery, creating tables is essential for structuring and storing your data effectively. You will have several options for this task, each suitable for different use cases and skill levels.

Using the Google BigQuery WebUI

The Google BigQuery WebUI provides a user-friendly graphical interface for creating tables. To get started, navigate to your BigQuery console, select your project and dataset, then click the CREATE TABLE button. You can manually fill in the table name and schema details from there. The WebUI also allows you to create a table if it does not exist by selecting the appropriate option. Make sure you have the correct permissions before you proceed.

- Click CREATE TABLE

- Enter your table name

- Use the Show options button for additional settings

- Click the Run query button to execute the creation

Creating Tables with BigQuery SQL

For more precise control or batch creating of tables, using BigQuery SQL might be the appropriate method. Execute a CREATE TABLE statement using the Run query button in the BigQuery WebUI. Here’s a sample SQL statement:

Use the CREATE TABLE IF NOT EXISTS statement to avoid errors if the table already exists.

Creating Tables Using the API

Alternatively, for programmatic table creation, the BigQuery API can be used. Specifically, making a jobs.insert API call assists in kicking off a table creation job. This option requires the use of service client libraries.

- Construct an API request to the BigQuery tables.insert method

- Include permissions to authenticate your request

- Define the table name, schema, and associated dataset

- Handle the response to confirm table creation

Creating a table using the BigQuery API involves a nuanced understanding of the client libraries and handling of JSON responses. For detailed coding examples and methodology, refer to BigQuery API documentation.

Remember to abide by all BigQuery quotas and limits to maintain the performance and avoid unnecessary errors when creating multiple tables or large datasets. Correctly utilizing these methods ensures a streamlined workflow in managing your BigQuery tables.

Best Practices for Table Creation

Ensuring Table Name Standards

To avoid errors and maintain consistency across your project, follow specific table naming standards. Use a clear and descriptive table name that reflects its content and function. It should be structured as projectname.datasetname.tableid, using hyphens (-) rather than underscores (_) to separate words. Ensure that table IDs are unique within a dataset.

Managing Access and Permissions

Assign appropriate permissions to control who can access and manipulate your tables. In BigQuery, access control can be set at the project level, dataset level, or table level. Utilize the principle of least privilege, granting only the necessary access rights required to perform a task.

Optimizing Tables for Query Efficiency

The schema design has a significant impact on performance. Define your schema explicitly to minimize ambiguity and potential errors. Select the right data types, and consider using partitioned tables to improve query performance and reduce costs. Use the standard SQL data type STRING for IDs to ensure compatibility.

When possible, refer to authoritative resources such as the Google Cloud documentation on creating and using tables and the BigQuery SQL reference to further elaborate on these concepts and provide practical usage examples with the right context.

Advanced Table Features

In Google BigQuery, mastering advanced table features such as working with views and table partitioning can significantly enhance your data science projects by improving query efficiency and data organization.

Working with Views in BigQuery

A view in BigQuery acts as a virtual table defined by a SQL query. Unlike traditional tables, views do not store data; they dynamically present it according to the specified SQL query. Here's how to create a view:

Creating and managing views with the BigQuery console streamlines data analysis. You can specify complex transformations and filters, enabling you to present tailored datasets to different users.

Partitioning Tables for Performance

Partitioning your tables in BigQuery can yield a high improvement in performance and cost savings. BigQuery supports various partitioning options, such as time-unit column partitioning and integer range partitioning, allowing for more efficient querying. For example, partitioning by date ensures that a query scans only the relevant partitions:

Partitioning and Clustering

Partitioning and clustering are two properties that significantly affect how BigQuery queries your data. Partitioning divides your table into segments, often by date, allowing BigQuery to scan only relevant partitions when querying. This reduces the amount of data scanned and costs.

For partitioning, you might use something like this in SQL:

Clustering, on the other hand, works alongside partitioning by ordering the data within each partition. Here's how to specify clustering in SQL:

Table Expiration

Another important property to consider is the table's expiration time. This setting automatically deletes your table after a certain period, which is particularly useful for temporary tables or datasets. You can set this directly in the BigQuery UI when creating a table or via SQL:

Loading Data into BigQuery Tables

To efficiently work with BigQuery, knowing how to populate tables with your data is crucial. BigQuery offers flexibility in loading data from various formats, but here we'll focus on CSV files and Google Sheets, two common sources for importing data into a BigQuery dataset.

Load Data from CSV Files

When you have a dataset in a CSV file, you can create a table in BigQuery using that data. Here's how you do it:

- Specify your file details:

- Source: The CSV file's location (local file system, Google Cloud Storage)

- File format: Confirm that the file is in CSV format.

- Set your table's schema: Define your table's structure, specifying the column names and data types.

- BigQuery job configuration:

- CreateDisposition: Determines if the table should be created if it doesn't exist.

- WriteDisposition: Dictates whether to append, overwrite, or fail if the table already exists.

Using the bq command-line tool, the above command initiates a job to load a CSV file (mydata.csv from the Cloud Storage bucket mybucket) into a table (mytable) in your dataset (mydataset). CREATE_IF_NEEDED and WRITE_TRUNCATE are used to automatically detect the schema (if --autodetect flag is set) and, if the table exists, its content is overwritten.

Importing Data from Google Sheets

Transferring data from Google Sheets into BigQuery is a seamless process:

- Prepare your Google Sheet: Ensure it is shared appropriately and accessible.

- Identify your sheet's attributes:

- Spreadsheet URL: The link to your shared Google Sheet.

- Sheet Name: The exact name of the sheet tab containing your data.

- Configure your BigQuery job:

- Dataset: The target dataset for your new table.

- Table: The destined table name within the dataset.

- WriteDisposition: Decide how to handle pre-existing data in the table setup.

The command line above demonstrates loading data from a Google Sheets file, identified by a given URL, into a designated table within mydataset. Again, --autodetect helps define the schema based on the Google Sheets data, and the --source_format=NEWLINE_DELIMITED_JSON indicates the structure of the source data.

By following these steps to load data from CSV files or Google Sheets, you'll have your BigQuery tables set up and ready for analysis. Keep in mind to adhere to BigQuery's best practices when loading data to ensure optimized performance and cost efficiency.

Maintaining and Updating Tables

Proper maintenance and regular updates are critical to ensuring your BigQuery tables continue to perform optimally. Understanding how to handle schema changes and troubleshoot common errors will save you time and protect the integrity of your data.

Handling Schema Updates

Regarding schema updates, it's important to note that BigQuery permits certain modifications to table schemas, such as adding columns and relaxing a column's data type. However, you cannot remove a column or change a column's data type once it’s been created. To update a table's schema, you can leverage the ALTER TABLE statement or utilize the BigQuery UI for adding new fields.

For example, to add a new string column named email_address, your SQL statement would look like this:

When planning schema updates, consider the following elements to mitigate errors and downtime:

- Backwards compatibility: Ensure existing queries and applications can handle schema changes without disruption.

- Default values: If your new columns require default values, you'll need to include them in your schema update.

- Testing changes: It's crucial to test schema changes in a separate environment before applying them to your production tables.

Troubleshooting Common Errors

Encountering errors during table creation or maintenance is not uncommon. Here are some steps to help you troubleshoot these issues:

- Verify permissions: Ensure your account has the proper permissions to modify table schemas and update tables.

- Valid SQL syntax: Use precise syntax; even small typos can cause errors in BigQuery.

- Check column constraints: Be mindful of NOT NULL constraints that may influence your ability to insert or update data.

- Understand BigQuery errors: BigQuery provides specific error messages and codes which can be referenced in the official documentation.

A common error message you might encounter is:

This indicates an attempted prohibited change to an existing column's type. Review the type change restrictions, correct the update statement, and retry the operation.

Remember, due diligence in planning schema changes and addressing errors can save you from data inconsistency and downtime.

BigQuery provides the tools and flexibility to accommodate a wide array of data-driven scenarios - with the right queries, your datasets transform into valuable insights that guide smarter business decisions.

Looking to do more with your data?

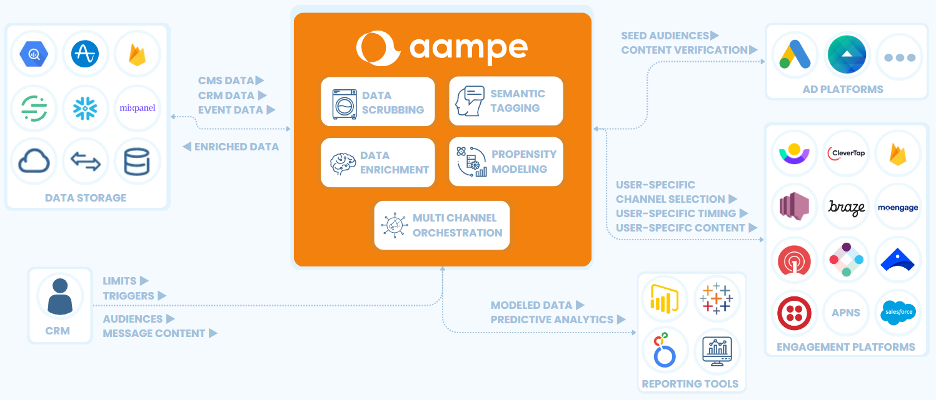

Aampe helps teams use their data more effectively, turning vast volumes of unstructured data into effective multi-channel user engagement strategies. Click the big orange button below to learn more!

.png)